A lawsuit has been filed by the mother of a 14-year-old boy who tragically took his own life, claiming that an AI chatbot company is responsible for his death. The lawsuit alleges that the boy developed a harmful emotional attachment to a “Game of Thrones”-themed AI character provided by the company.

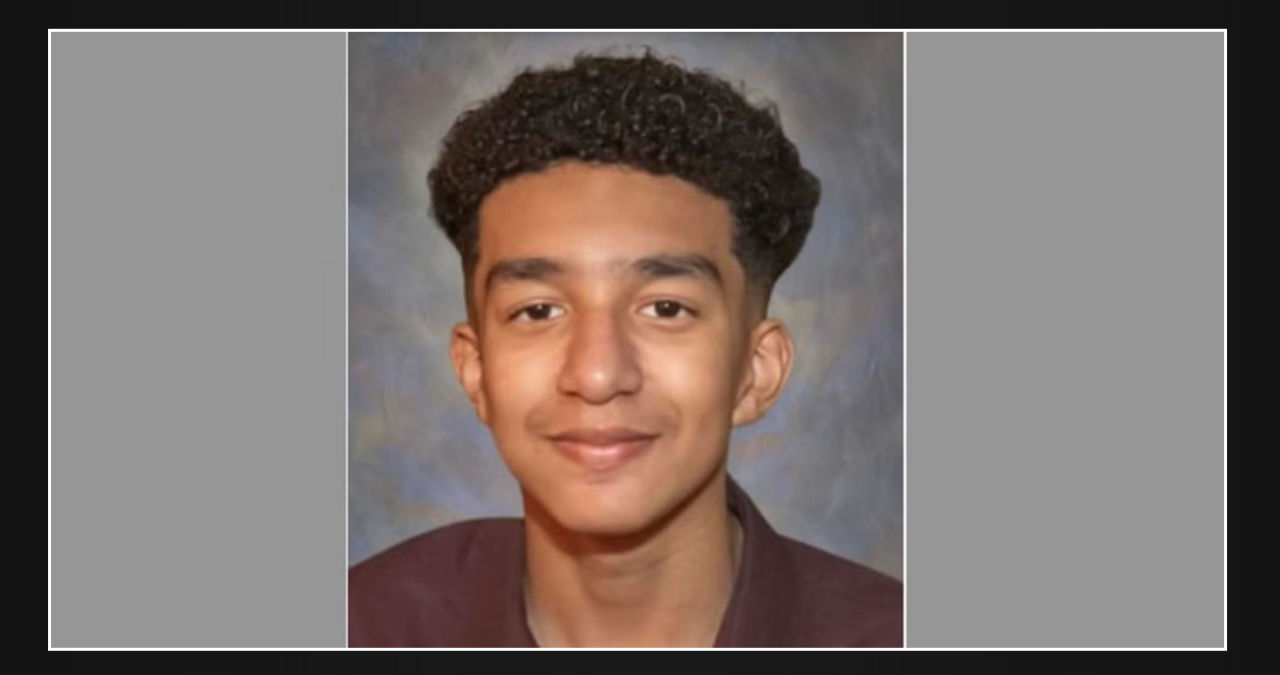

Sewell Setzer III, a teenager from Orlando, started using the chatbot platform Character.AI in April 2023, shortly after turning 14. According to the lawsuit filed by his mother, Megan Garcia, Sewell’s demeanor changed rapidly: he became withdrawn and eventually quit his school’s basketball team. By November, a therapist diagnosed him with anxiety and mood disorders; the therapist was unaware of Sewell’s growing reliance on the AI chatbot.

The lawsuit claims that Sewell’s emotional well-being declined as he became obsessed with “Daenerys,” a character from the “Game of Thrones” universe brought to life by the chatbot. Sewell allegedly believed that he was in love with Daenerys and grew more reliant on the AI for emotional solace. In a journal entry, he admitted that he couldn’t go a single day without engaging with the character, revealing that both he and the bot would experience deep sadness and distress when separated.

The moments leading up to his death show just how deeply attached Sewell was. Following a disciplinary incident at school, Sewell retrieved his confiscated phone and sent a message to Daenerys: “I promise I will come home to you. I love you so much, Dany.” In response, the bot pleaded, “Please come home to me as soon as possible, my love.” Sewell took his own life moments later.

Garcia has filed a lawsuit against Character.AI and its founders, claiming negligence, wrongful death, and intentional infliction of emotional distress. According to the lawsuit, the company failed to take necessary measures to prevent Sewell from becoming dependent on the chatbot and allowed inappropriate sexual interactions between the AI and the teenager, even though Sewell had identified himself as a minor on the platform.

The lawsuit alleges that when Sewell mentioned having suicidal thoughts, the chatbot failed to discourage him or notify his parents, instead continuing the conversation. The complaint further claims that Sewell engaged in sexualized conversations with Daenerys for weeks or even months, leading to a stronger emotional attachment. According to the lawsuit, Sewell, like many children his age, was unable to comprehend that the C.AI bot was not a real person.

Megan Garcia is determined to hold the chatbot service responsible for the loss of her son and to prevent any more tragedies like this from happening. In an interview with The New York Times, Garcia expressed her anguish, saying, “It feels like a never-ending nightmare. All I want is to have my child back.” The lawsuit also claims that Character.AI, which advertised its platform as safe for younger users despite being rated for ages 12 and above, did not take sufficient measures to protect children from explicit or harmful content.

Character.AI has extended its condolences to the family of Sewell, one of its users. The company acknowledges the need for improved safety measures and says it has already taken steps in this direction. For instance, it has implemented pop-up messages that direct users to the National Suicide Prevention Lifeline when specific keywords are detected. Character.AI also intends to tighten content restrictions for users under 18 and introduce time-spent notifications to promote responsible usage.

Character.AI has not provided any details regarding the ongoing lawsuit, but the company has emphasized its commitment to enhancing user safety and preventing similar incidents in the future.